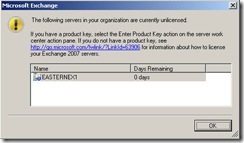

Yesterday I fired up an old Exchange Server 2007 VM lab environment. The first thing I saw when I started the Exchange Management Console (EMC) was a pop-up message saying, “The following servers in your organization are currently unlicensed”, as shown below.

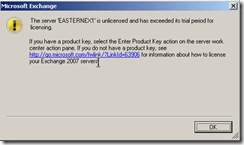

After clicking OK, I got another pop-up message saying, “The server ‘<server_name>’ is unlicensed and has exceeded its trial period for licensing.”

Fair enough; it had been a long time since I had installed the test environment. After obtaining a valid license key from MSDN, I went back to the EMC to update the product key. The only problem was that I couldn’t find the option anywhere! I found the following information on TechNet:

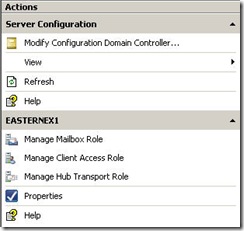

“
- Open the Exchange Management Console.

- In the console tree, expand Server Configuration.

- In the result pane, select the server that you want to license.

- In the action pane, under the server name, click Enter Product Key. The Enter Product Key wizard appears.

- On the Enter Product Key page, type the product key for the Exchange server, and then click Enter.”

Even with these instructions I still couldn’t find the option.

Then it struck me that I was using the 32-bit version of Exchange Server 2007, which clearly can’t be licensed because it is not supported for production use. Doh!

After some further digging I even found wording in the Help documentation that talks about entering the product key:

“Note:

This action is available only if you installed the 64-bit version of Exchange 2007.”

RTFM anyone? 🙂

Because you cannot license a 32-bit version of Exchange Server 2007, your options are to either reinstall the trial version or live with the warning messages. Interestingly, the license warnings are just that: warnings. They do not impede or remove any functionality.

I thought I would blog about this to save others from going through the same time-wasting process 🙂

I have the 32-bit version too… so you’re saying that when it “expires” I can still use it like before, except that it will show an annoying message? SWEET!! Thanks

Yes, that’s right. All the functionality is still there – you just get the annoying message.

Thanks for the information. I was struggling with the same problem too. When I didn’t get the option to register E2K7/32 from the EMC, I tried from the EMS with the cmdlet `Set-ExchangeServer -Identity ExServer01 -ProductKey aaaaa-aaaaa-aaaaa-aaaaa-aaaaa`, but had no luck until I finally found your blog.

Thanks once again for the blog, I appreciate it!!

Wish I’d found this article sooner! Well done for confirming what I’d experienced; it’s good to see a proper explanation.

I’m GLaD to have found this information on your blog. This surely kept a few handfuls of hair on my head.

Thanks for the blog. I had the same issue, but before expiration, as I was just about to enter a product key.

RTFM doesn’t apply: the little dialog that tells you about the product key should either (A) be dynamic, detect that you’re running the 32-bit version, and not mention a product key at all and/or explain that one isn’t needed due to the limitation, or (B) have an additional sentence IN THAT DIALOG that tells you that if you’re running the 32-bit version, a product key is not required.

Yes, thanks for this blog.

Yet another confusing and, some would say, stupid way to do things from Microsoft. They sent me a disk with a product key on it, then they hid the option to enter it. I will say no more, but perhaps a big message should appear saying ‘YOU CAN’T REGISTER THE 32-BIT VERSION’ so people don’t waste hours trying to do so. Cheers for the info.

Yes, thank you! We have the 64-bit version, but we were having issues getting drivers for student laptops to run Server ’08 for Exchange ’07 and were forced to install it on Server ’03 instead! THANK YOU for saving me further time looking for how to enter the product key in this version!

For those who wish to get rid of that nagging product key pop-up: install a 64-bit Windows machine along with the 64-bit Exchange 2007 Management Console. Enter the product key using the 64-bit management console and get rid of that irritating product key pop-up message.

In my case, it no longer works. I have had it in this “trial” mode for a year, and now my Exchange services keep disabling themselves. I can no longer send email, etc. It’s a pain!